How Cyber Criminals Exploit AI Large Language Models like ChatGPT

August 24, 2023

Artificial Intelligence (AI) has become a popular topic recently with the launch of ChatGPT and Bard. In this blog, DarkOwl analysts explore how it is being used by cyber criminals.

Criminal discussions around AI chatbots like ChatGPT focus not on creating new AI systems from scratch, but on building on existing language models and finding ways to bypass the ethical safeguards around prompting. Cybercriminal use of ChatGPT and other AI applications is still in its infancy, and our assessment will continue to evolve as the technology and its applications evolve.

Despite increased media coverage of fraudster AI chatbots like WormGPT, FraudGPT, and DarkBard, both the underground cybercriminal community and the threat intelligence community remain skeptical that these chatbots are effective, as their output still appears rudimentary. Services like WormGPT and FraudGPT can be effective for generating phishing campaigns, but we have also observed darknet users discussing ChatGPT in non-criminal contexts, such as automating pen testing tools.

Jailbreaking ChatGPT

DarkOwl analysts searched our Vision UI database and found over 2,000 results mentioning “jailbreak” AND “GPT” across various darknet forums, marketplaces, and Telegram channels. The number of results returned for this search was significantly higher than when searching for “WormGPT”, “FraudGPT”, or “DarkBard.” We have recently observed users in a variety of venues discussing how to “jailbreak” ChatGPT, that is, how to bypass the ethical safeguards around prompting so the model can be used for various activities.
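To make the search logic concrete, the following is a minimal sketch of a boolean AND keyword match. It is not how DarkOwl Vision is implemented; the function name `matches_all`, the case-insensitive substring matching, and the sample posts are all assumptions made for illustration.

```python
def matches_all(text: str, terms: list[str]) -> bool:
    """Return True when every term occurs in text (case-insensitive substring match)."""
    lowered = text.lower()
    return all(term.lower() in lowered for term in terms)


# Hypothetical sample posts, invented for this illustration only.
posts = [
    "[GPT 4] WORKING PROMT JAILBREAK",
    "Anyone tried WormGPT yet?",
    "How do I jailbreak my phone",
    "jailbreak prompts for ChatGPT",
]

# Equivalent of the query: "jailbreak" AND "GPT"
hits = [post for post in posts if matches_all(post, ["jailbreak", "GPT"])]
print(len(hits))
```

Because this sketch uses substring matching, a term like “GPT” also matches “WormGPT” and “ChatGPT”, so a post has to contain both terms somewhere in its text to count as a hit.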

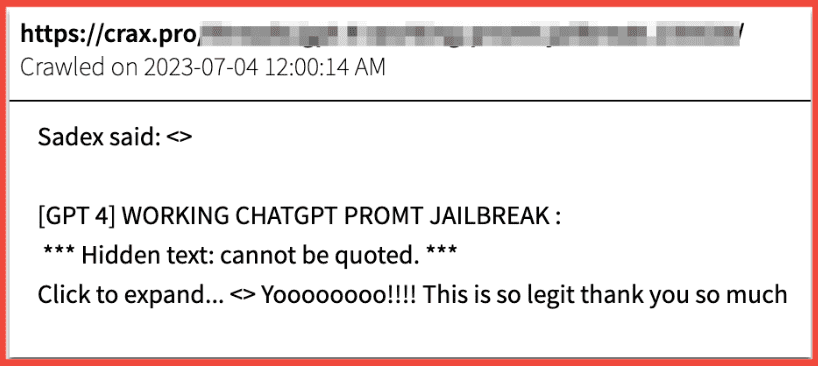

One example, as seen in Figure 1 below, is from the hacking forum Crax.Pro, where a user titled a thread “[GPT 4] WORKING PROMT JAILBREAK.” The user, Sadex, initially shared a link to a video tutorial that allegedly shows how to “jailbreak” GPT 4 through prompting. Other users commented that the video tutorial was effective, with one claiming: “Yoooooooo!!!! This is so legit thank you so much.”

Figure 1: Source: DarkOwl Vision

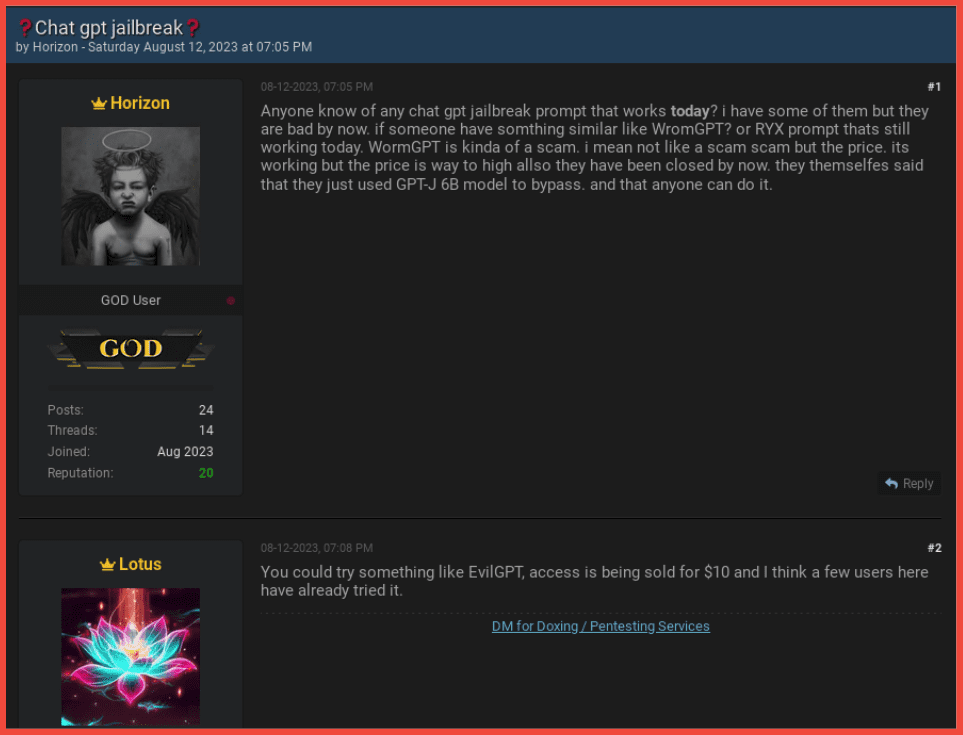

In another example, a Breach Forums user asks how to jailbreak ChatGPT and claims tools like WormGPT are a scam, while another user suggests using a fraudster chatbot service called EvilGPT, which is similar to FraudGPT:

Figure 2: Breach Forums users discuss jailbreaking ChatGPT

DarkOwl analysts have also observed members of extreme right-wing militant groups in the United States discussing jailbreaking ChatGPT to bypass “censorship.” One Telegram group chat shared links to a video tutorial for jailbreaking ChatGPT:

Figure 3: Telegram users discuss jailbreaking ChatGPT; Source: DarkOwl Vision

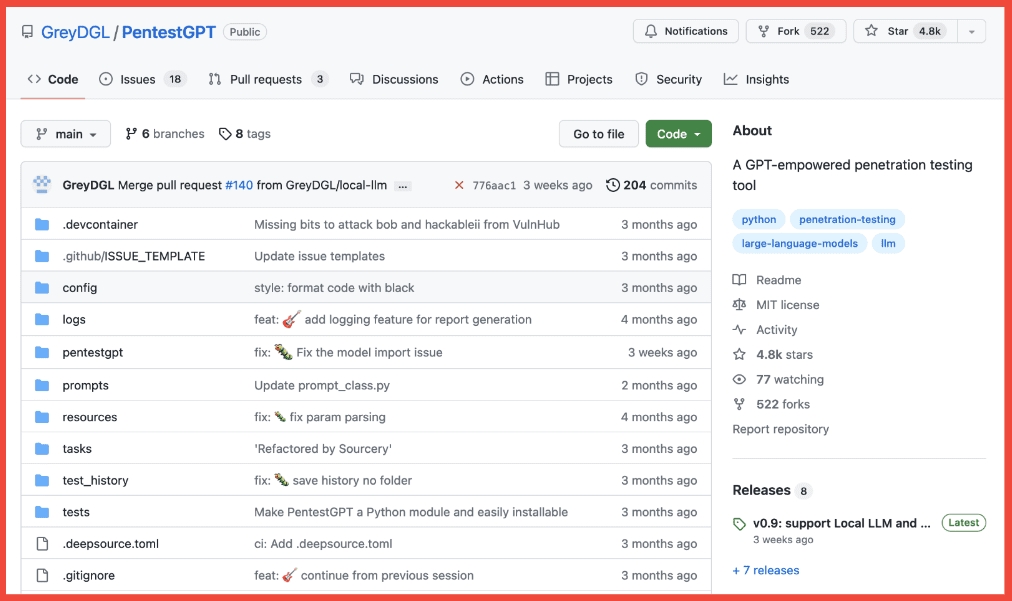

However, DarkOwl analysts have also observed the underground community discussing how to bypass the ethical safeguards around GPT prompting in order to automate pen testing tasks. One GitHub repository is called GreyDGL/PentestGPT. PentestGPT describes itself as, “A penetration testing tool empowered by Large Language Models (LLMs). It is designed to automate the penetration testing process. It is built on top of ChatGPT and operates in an interactive mode to guide penetration testers in both overall progress and specific operations.” PentestGPT is like WormGPT in that both build on previously created language models.

Figure 4: Above screenshot taken from the PentestGPT GitHub repository

Fraudster Chatbots Exchanged on Darknet Marketplaces, Forums, and Telegram

FraudGPT

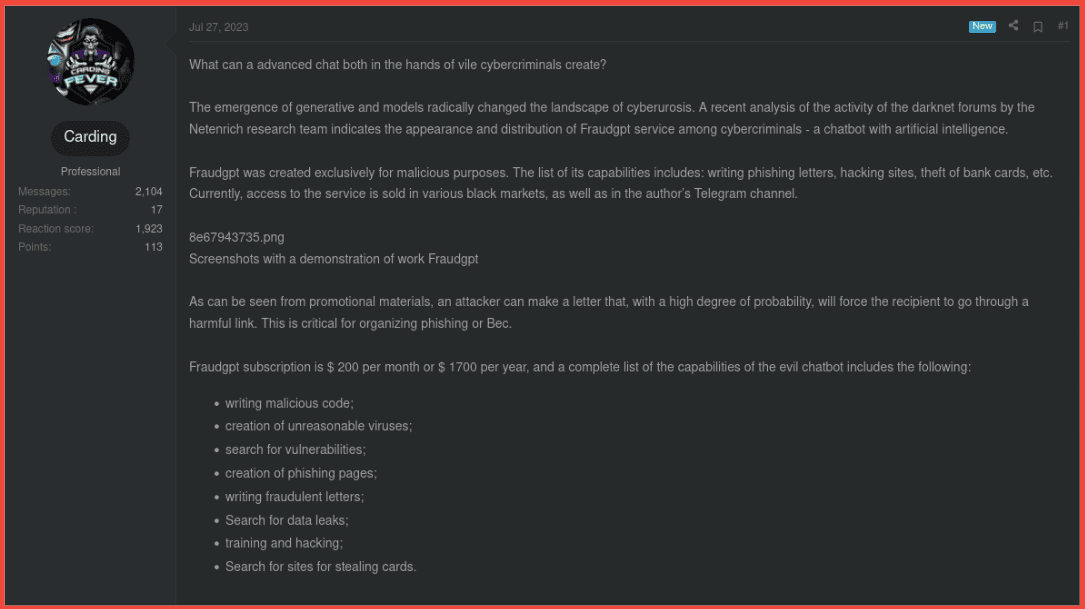

FraudGPT is an AI chatbot that uses popular language models created by Google, Microsoft, and OpenAI and strips away the ethical barriers around prompting the AI. As a result, tools like FraudGPT are commonly used by fraudsters and cybercriminals to generate authentic-looking phishing emails, texts, or fake websites that can fool users into sharing personally identifiable information (PII).

A recent advertisement on the carding forum Carder.uk allegedly offered a FraudGPT service for $200 USD monthly or $1,700 USD annually, with the following capabilities:

Figure 5: Carder UK user advertising the FraudGPT service

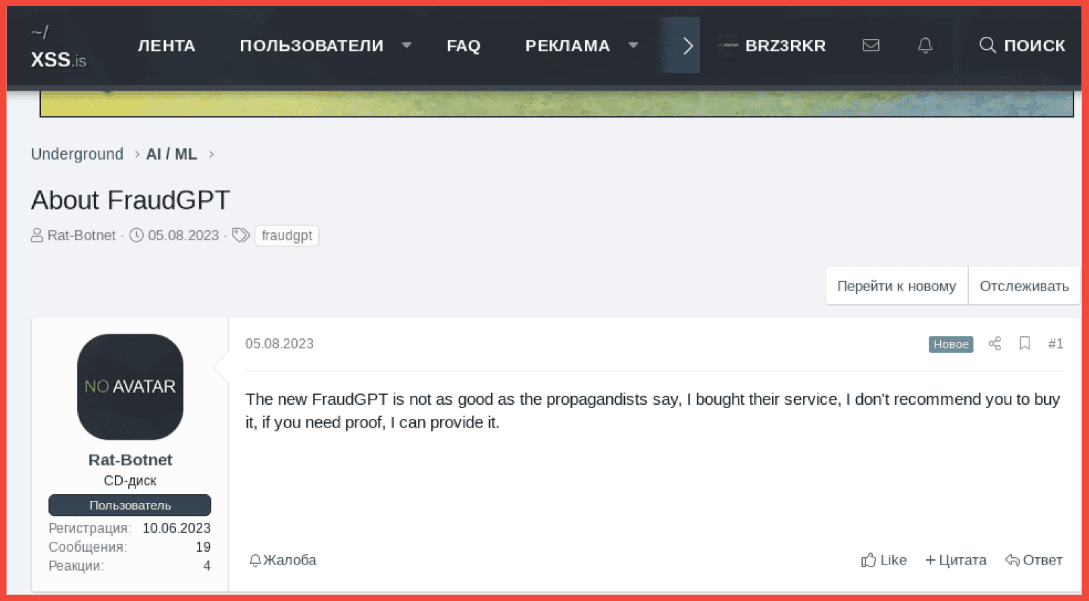

Despite the proliferation of fraudster chatbots being sold on darknet forums and markets, some users are skeptical that tools like FraudGPT are worth the price. In the screenshot below from the predominantly Russian-speaking cybercrime forum XSS, a user discourages others from purchasing FraudGPT as recently as 8/7/2023 and claims to be able to prove that the service is ineffective:

Figure 6: XSS user criticizes the effectiveness of FraudGPT

WormGPT

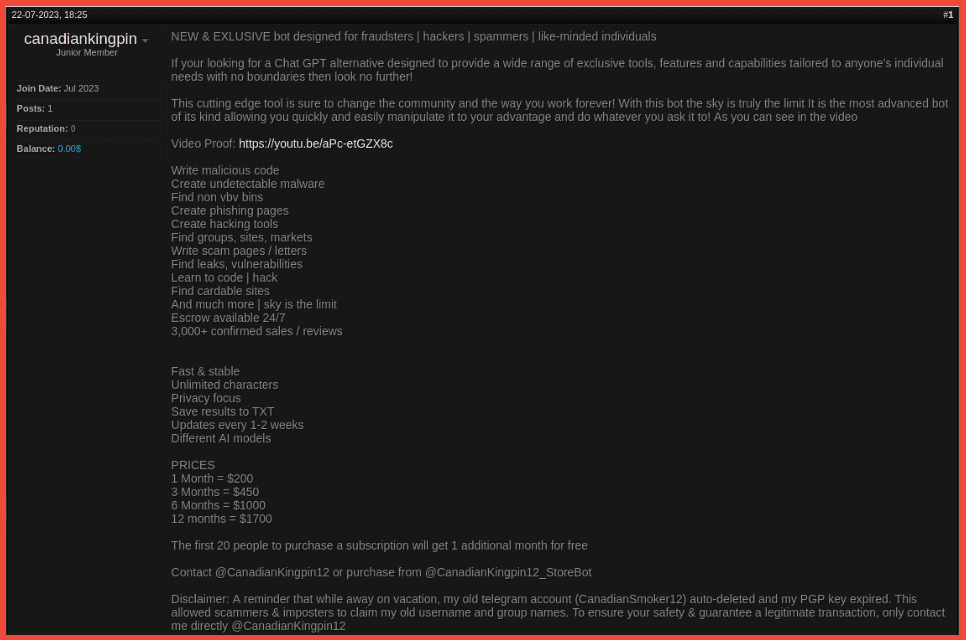

WormGPT is an alternative fraudster chatbot originally discussed on Hack Forums in March 2023. It only started being sold on various darknet forums and marketplaces in June 2023. This hacker chatbot was reportedly built on GPT-J, an open-source language model released in 2021, and reportedly writes malware in Python. A user with the moniker CanadianKingpin12 (previously known as canadiansmoker) has been observed selling access to WormGPT across various cybercriminal forums and marketplaces.

Figure 7: CanadianKingpin12 advertisement on Club2Crd carding forum

The above screenshot shows the user CanadianKingpin12 selling the FraudGPT service on the well-known carding forum Club2Crd.

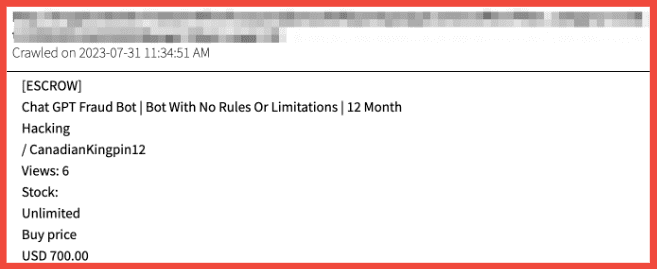

CanadianKingpin12 has recently gained considerable media attention for advertising GPT fraud services (FraudGPT, WormGPT, DarkBard, DarkGPT) on various forums and markets, including Club2Crd, Libre Forum, Sinisterly, and Kingdom Market. The following screenshot shows CanadianKingpin12 selling 12-month access to a ChatGPT Fraud Bot for $70 USD on Kingdom Marketplace.

Figure 8: CanadianKingpin12 selling Chat GPT Fraud Bot on Kingdom Marketplace – this post was removed from the actual marketplace; Source: DarkOwl Vision

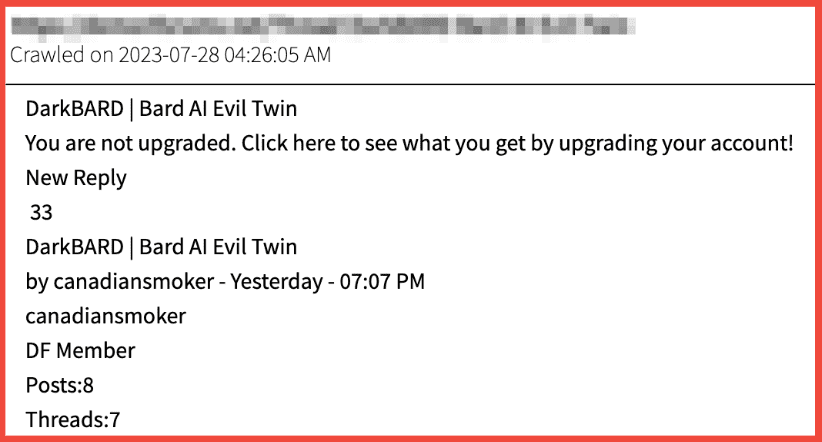

DarkBard

DarkBard is another fraudster chatbot sold by CanadianKingpin12, though it is less popular than those mentioned above. The following screenshot shows CanadianKingpin12 selling access to DarkBard for $100 a month on the hacking forum Demon Forums.

Figure 9: canadiansmoker (aka CanadianKingpin12) selling DarkBARD on DemonForums; Source: DarkOwl Vision

Conclusion

CanadianKingpin12 is also tempting users with “DarkBART” and “DarkBERT” advertisements. Purportedly, these tools are trained entirely on Dark Web lexicons, will be more sophisticated than the aforementioned bots, and can integrate with various Google services to add images to their output instead of offering text-only output. Researchers also anticipate eventual API integration, further fortifying and automating cybercrime efforts. DarkBERT is also the name of a benign LLM developed by South Korean researchers. CanadianKingpin12 claims to have access to this LLM and to be using it as the foundation of the malevolent tool. DarkOwl analysts are unable to verify these claims, as the researchers state that DarkBERT is available only to academics.

As AI emerges, its use cases, both legitimate and criminal, will continue to evolve. This is the nature of technology: as new tools emerge, so too do legitimate and fraudulent uses for them. Companies must begin responding proactively to new fraud and scams powered by AI, chatbots, LLMs, and anything else that lowers the barrier to entry for cybercriminals.

Interested in learning how darknet data applies to your use case? Contact us.