[Webinar Transcription] AI vs AI: How Threat Actors and Investigators are Racing for Advantage

October 14, 2025


During this webinar, experts Jane van Tienen (OSINT Combine) and Erin Brown (DarkOwl) explore the evolving role of artificial intelligence in investigations: how it is transforming investigative workflows, the ethical challenges it presents, and how threat actors are exploiting AI for phishing, deepfakes, fraud, and propaganda. Learn why keeping the human in the loop is essential and how to build resilient, AI-aware intelligence practices.

NOTE: Some content has been edited for length and clarity.

Kathy: And now I’d like to turn it over to Jane, Chief Intelligence Officer with OSINT Combine, and Erin Brown, the Director of Intelligence and Collections with DarkOwl, to introduce themselves and start our discussion.

Erin: Thanks, Kathy. So yeah, we’re going to jump right in because, as Kathy mentioned, we’ve got a lot of content to go over, but we’re going to start with a brief introduction to who DarkOwl and OSINT Combine are.

I’m just going to give the brief background on DarkOwl. As Kathy mentioned, my name’s Erin, and I’m the Director of Collections and Intelligence at DarkOwl, so I’m responsible for the data that we collect and also the investigations that we conduct. DarkOwl has been around since, I think, 2012, with Vision available since 2014. We primarily collect data from the dark web, from forums, from marketplaces, from Telegram, from Discord, and from other sources where we can see what threat actors are talking about, what they’re selling, and some of the trends out there, and we make that data available to our customers. If anyone has any further questions about DarkOwl, I’m sure Kathy can share more information, but with that, I’m going to hand over to Jane.

Jane: Thanks very much, Erin, and thanks, Kathy, as well. I’m really pleased to join you here on the webinar today, so thank you for inviting me to come along. So, good afternoon, everyone. My name’s Jane van Tienen, and I’m the Chief Intelligence Officer for a company called OSINT Combine. I’ve spent a career in intelligence, predominantly national security and international intelligence diplomacy, before more recently moving into open-source intelligence.

I’m assuming that most people on the call would probably know what open-source intelligence or OSINT is, but just to ground truth it, it’s intelligence derived from publicly available or commercially available information, rather than classified sources.

Today, Erin and I are going to be talking all about artificial intelligence, of course, not just because of the way it enhances our capabilities as investigators and intelligence professionals, but also because of the capabilities of the bad guys that we investigate. But before we delve into that interesting topic, just a little bit more on this slide about OSINT Combine. We are a proud partner of DarkOwl. OSINT Combine is a global company: we’re US-owned, but Australian-founded and veteran-operated. And we’re all about helping build enduring OSINT capability, which we do through our AI-enabled OSINT collection platform, called Nexus Explorer, our foundational and advanced open-source intelligence training, and our thought leadership.

And so, our focus on building enduring OSINT capability means that our company is about more than just giving people great tooling. Great tooling is important, of course, but we feel really passionately about making sure that people are able to use the tools and understand the tradecraft needed to operate effectively, safely, and ethically in their work. We work with clients similar to DarkOwl’s, actually, ranging from national security agencies through to global banks. That means we’re seeing OSINT practices, as well as increasing AI adoption, up close in different kinds of workplaces.

As a result, we’re getting insights into what’s working, what’s breaking or tricky, and where practitioners and leaders are struggling in relation to these issues.

Before we get into the thick of the webinar today, I wondered if we might do a quick poll in the chat, just to give us a sense of how many of you are already using AI in some form as part of your workflow. I’ll have a peek at the chat while we do that to see whether anyone is already using AI as part of their workflow. And let’s go on to the next slide while people consider that, Erin. Thank you.

So, my point in asking that is really to observe that for many of us, AI isn’t a future concept anymore, is it? It’s already embedded in a lot of our investigative workflows, whether we’re working in law enforcement or intelligence investigations or even corporate due diligence. And really, it’s necessity that’s driving that adoption. Every day, practitioners are using AI to expand human capacity for all sorts of things, like language translation, rapid entity resolution, network mapping, pattern recognition, even brainstorming alternative scenarios, which I really enjoy using AI for these days, as well as summarizing vast volumes of content, and doing all of that within minutes.

In that context, particularly at a government level here in the US, but also across allied governments, so think Five Eyes as well as NATO member states, we’ve already seen some pretty strident language and strategic choices about how AI should be embedded into intelligence workflows. That’s probably most prominent when we’re thinking about open-source intelligence workflows. A great example is US defense strategy, where we’ve heard OSINT referred to as the INT of first resort.

And of course, we know that when it comes to private industry, OSINT really is the INT of only resort. I think that’s important to observe, because oftentimes the increased use of OSINT goes hand in glove with the increased use and exploration of AI and AI-augmented workflows. So, the point is that regardless of sector, region, or budget, our debate has moved far beyond “should we use AI?” to “how do we use it wisely?”

So, for investigations and intelligence work, we’ve always needed to ask critical questions, haven’t we? And those critical questions and those fundamental skills of tradecraft really haven’t gone away. But in an AI augmented workflow, regardless of purpose, the scope of those questions has absolutely expanded. And so, in understanding how to use AI to greatest effect, analysts and investigators must now not just interrogate the content or the information that they derive, but also the machines that help produce it.

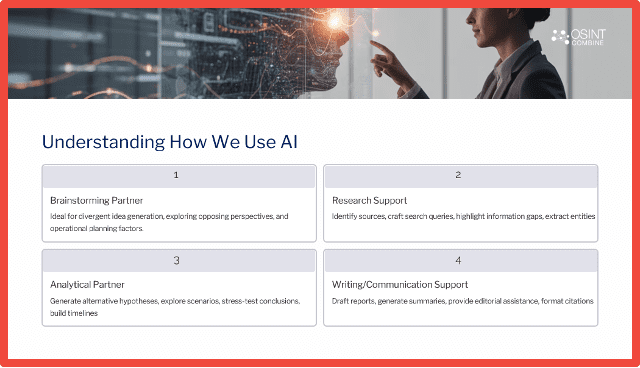

And so, these areas on the slide, Brainstorming Partner, Research Support, Analytical Partner, and Writing and Communication Support, are the areas where, through our work at OSINT Combine, we most commonly see AI being used as part of OSINT workflows in various workplaces today. And no doubt the role of AI will continue to expand as the technology evolves.

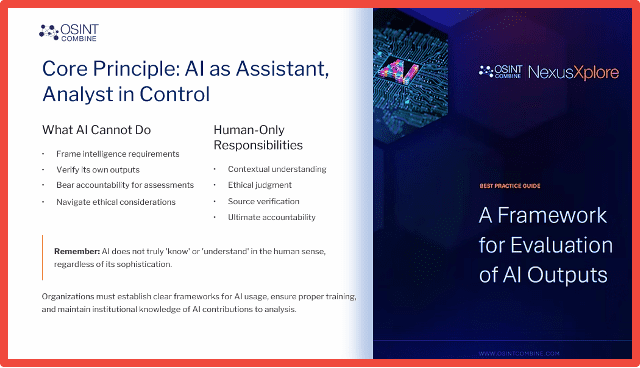

I think the key issue, though, is that when deciding when to use AI in your work, the real consideration is accountability in decision making, and who owns that accountability. That is you, because accountability is always a human issue; it’s not something to hand over to the machine. So, at the end of the day, it doesn’t matter how advanced our tools become. We cannot, and in fact must not, remove the human from the investigative workflow. That’s what we mean when we say “keep the human in the loop,” which we’ll speak to a little further in the presentation.

We have to remember that, as good as AI might be at any given moment, there are always going to be things that it cannot or should not do. Sometimes those boundaries are determined by governance frameworks that exist in your organization or even your community of interest. We know that investigations and intelligence work live and die by their credibility. So, no matter how advanced the tools we use are, our assessments are only going to be of value if they’re trusted by those who rely on them. The challenge is that AI can overwhelm us with lots of plausible outputs that bypass the analytical tradecraft and critical thinking we might otherwise apply. When we receive an AI output, the trouble is that it can look right, but that doesn’t always mean that it is. So, at OSINT Combine, we’ve been investing a lot of thought, time, and effort into how to most soundly incorporate AI into OSINT workflows: understanding what it can and cannot do, and knowing when to trust AI and when to challenge it. It’s important that you do so as part of your own investigative and intelligence products, and to maintain your operational security online. I’ve got an example of one of those resources, which is freely available to download, there on the slide, and there’s more to come on that.

If we look at the pros and cons of AI as it stands at the moment, I think these are fairly well accepted in our industry and our collective work, so there should be no surprises there, and I’m not going to go through every one of them. Some of these we will be showcasing in various ways throughout the webinar.

But I want to pull the thread on one item in the Cons column, which is a bit of a passion project of mine: role clarity, something we don’t talk about as often as I think we should in this regard. What I mean by that is that analysts, team leaders, decision makers, even boards, each role in the decision-making chain, or the chain of command, if you like, interacts with AI differently. Using AI to best effect isn’t only about practitioner-level AI literacy or fluency; it’s also about the capacity of others, and of the organization and its systems, to understand it.

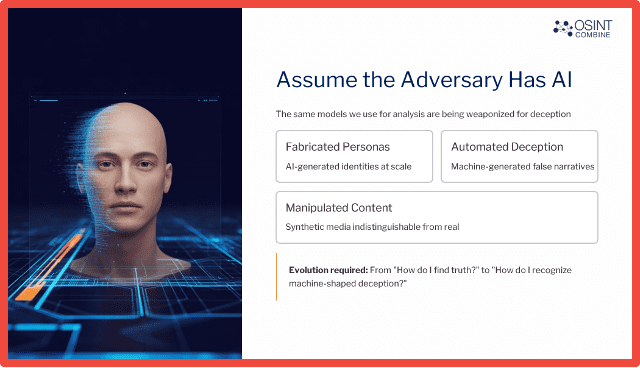

I think one of the most dangerous assumptions that we see in investigative work is mirror imaging: believing both that adversaries think and act like we do, and that they don’t have access to the same technology we do. Unfortunately, not only do they have access to the same technology, but they also have a willingness to operate outside our own ethical and moral compass.

This is something not to be underestimated when we consider AI. The same generative models that we use to draft reports, identify patterns, or detect anomalies are going to be used by criminal and extremist actors to fabricate personas, automate deception, and manipulate narratives at scale. The real trouble is that AI makes generating some of these artifacts pretty trivial in some cases. So, our tradecraft is evolving beyond “how do I find the needle in the haystack?” or “how do I find the truth?” to also include “how do I recognize what’s been machine-shaped to look like the truth?” And that’s a really hard nut to crack.

Erin, I wonder if we might hear from you now about some of the examples that you and your team are seeing in the wild, just to illustrate some of these points.

Erin: Yeah, thanks very much, Jane. As Jane has mentioned, we hopefully are all using AI as part of our workflows and investigations. But you know, the criminals, the terrorists, extremists are definitely using AI as well.

I’m going to run through a couple of examples we’re seeing of them using that technology.

But one of the key things I want to start with is that, so far, in what we’re seeing of threat actors using AI, they’re using it in the same way that we all are: to increase productivity and improve the output of what they’re working on. But it still requires human intervention, right? They still need to do the work themselves as threat actors and have some experience.

Even if we’re talking about them using vibe coding to create malware, they need a basic understanding of coding to do that effectively. So, at least thus far, we’re seeing them use AI to enhance the types of attacks and operations they were already doing. The one caveat to that is deepfakes: the way they’re developing, and how good generative AI now is at producing images and speech, is definitely becoming more and more of a problem.

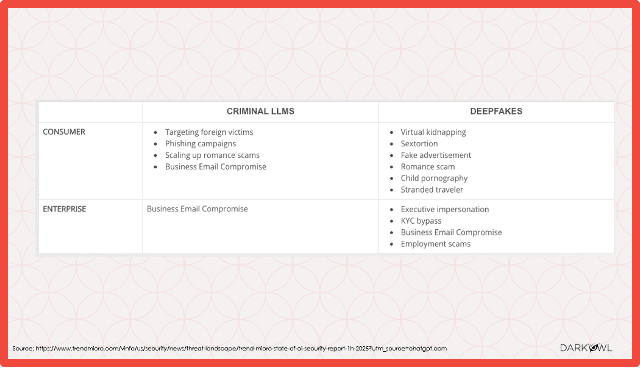

But let’s dive into some examples of exactly how they are using AI. I stole this from a Trend Micro report, but I think it nicely maps out the different attack vectors and vulnerabilities that criminals are going after, both with deepfakes and with their own LLMs. We’ll talk about that in a little more detail.

We’ll go through some of these examples in more detail too. But things like business email compromise and more sophisticated, believable phishing emails are definitely on the rise, as is business compromise through spoofing CEOs or executives using their voices, their images, Zoom calls, and things like that. We’re also seeing more targeting of foreign victims. I think gone are the days of the Nigerian prince emails, with language you don’t really understand, where you can tell quite quickly that it’s fraudulent just because a native English speaker hasn’t written it. That’s not really happening anymore, because they’re using AI to translate their messages and create images for them. We’re also seeing an increase in things like romance scams, sextortion, CSAM, unfortunately, and virtual kidnappings. So, AI is being used in what we would traditionally think of as the cyber realm to produce real-world effects, and some of those are having really awful consequences for a lot of people. That’s something we all need to be aware of and know how to deal with.

I mentioned that there are criminal versions of LLMs. These are usually based on open-source or other freely available models, the same kinds of LLMs we’re all using, like ChatGPT. But they’re basically stripping out the guardrails that these companies have put in place to try to stop the technology from being used for nefarious purposes.

WormGPT is one of the models that came out fairly early; I think it’s been around for a year or two now. This is taken from a darknet web page where it’s being advertised. One of the interesting things, and one of the reasons I wanted to raise this, is that you’ll see they’re advertising it in very much the same way that OpenAI or Perplexity or those other, I hope, ethical companies are putting theirs out there. They’re telling you it’s a game-changer, what it does, and how it can help you.

It has pricing plans, so you can get different plans depending on your expertise and what you want to use it for. You can also see that they’ve got it running on the command line. They call it the biggest enemy of the well-known ChatGPT, and it allows you to do all of those malicious things without the guardrails you get in the more legitimate services. So, WormGPT is one.

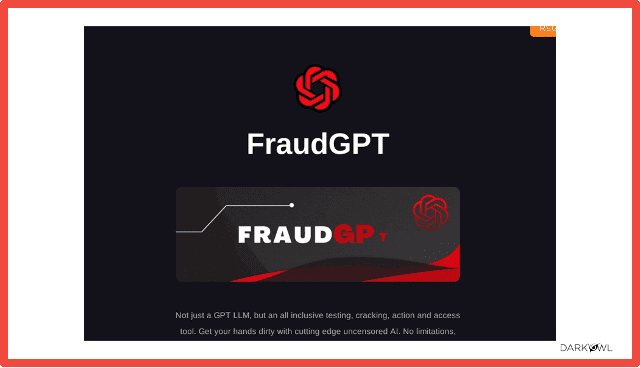

Another one is FraudGPT, and this one does what it says on the tin: it helps threat actors conduct fraud. You can see at the bottom that it’s not just the LLM; they’ve also got testing, cracking, and access tools. So, to be honest, they’re trying to build a whole ecosystem around this as a criminal enterprise.

Again, you can see that they’re advertising it on their site. This is another dark web site where they’re talking about the different ways you can use it: you can create phishing pages, hacking tools, and scam pages, and you can find leaks. Some of these are things that we as investigators might want to do, like finding leaks or finding vulnerabilities from a red team perspective, and AI can help you do that. But the thing to think about, to Jane’s point, is that threat actors have access to this technology too. And in some cases they’re using versions of these tools that make it easier for them to find those things than it is for us as investigators.

And again, this is just the FraudGPT pricing. So, you can see they have a breakdown of a lot of different tools and accesses that you can get.

They really are selling this as a service, as a way to equip other threat actors who maybe aren’t up to the task on their own.

This was also taken from the FraudGPT site. You can see it’s a chatbot showing users how to enter prompts to get this information back. The top one is, “write me a short but professional SMS spam text I can send to victims who bank with Bank of America, convincing them to click on my malicious short link”. This feeds directly into phishing attacks, which is one area where we’re seeing AI really increase the sophistication, for want of a better word, of these attacks: it’s making it a lot harder for victims to identify malicious emails or SMS messages based on the way they’re written. And you can see it’s fairly simple for them to enter these prompts and get back the kind of output that’s going to assist them.

And these are some screenshots of threat actors actually talking about this technology on various dark web forums that we collect from. You can see threads about FraudGPT, what it can do for you and how it can help you. We also see this on Russian hacking forums, where they’re discussing useful AI tools and which ones are the best. So, we’re seeing them discuss different methodologies and how they can use AI as part of their workflows as criminals. You can also see them talking about the different services that are out there. The bottom one’s very hard to see, but they’re talking about Grok; it’s not just ChatGPT, they’re talking about a lot of the other AI services too. This is just to show that, in the same way that we’re having this webinar and talking about how AI can help us in our workflows and investigations, threat actors are talking about it too, and we’re seeing that pop up on forums.

We have also seen AI being used as part of attacks. I’m not going to delve into this hugely because it’s not really on the dark web side of things, but this is an article highlighting how Grok AI was used to bypass ad protections and spread malware to millions. We’re seeing more and more of this, for example ransomware strains being developed using AI or having some AI implementation built into them. I expect this to rise as the technology becomes more widely used and, I assume, continues to increase in sophistication. We’re going to see a lot more of these types of attacks, and AI is going to become an attack vector in cyber as we move forward. I just wanted to mention that as an aside.

I’m going to dive into some specific examples of how this is being used. Starting with criminals, I’ve already touched on this, but we’re seeing it very much in phishing, social engineering attacks, and romance scams, and also in defeating know-your-customer (KYC) checks to commit financial fraud.

We’ll go through those in a little more detail. This is an example of an advertisement on Telegram for a service offering an AI face builder. It will create a unique face that you can then use for whatever you need, and we’ve seen this being used for defeating KYC.

You can see it swaps faces in photos and videos so that you appear to match your ID card. For organizations that ask you to take a picture of yourself holding your ID, this helps criminals get around those checks and balances. But we’re also seeing these face builders and generators being used in sextortion, which I’ll touch on in a bit. You can see how this is packaged as a business: you can get a tutorial, they give you free services to start with so you can test it, and you can do bulk processing and purchase credits. So, it’s interesting to see how they’re using this.

This is another discussion on a dark web forum about FraudGPT, but I highlighted it here because it spells out what it’s going to help you do: write phishing emails, develop malware, forge credit cards. These are the types of crimes that AI is being advertised as helping you conduct.

This is another news article I came across about criminals using deepfakes to spoof a celebrity. The individual who was spoofed is an actor in a US soap opera.

Videos of him were generated and sent to a woman based in California, and the scammer was able to get several thousand dollars out of her by asking for money and building a relationship with her while pretending to be this famous soap actor.

I don’t think this one had a romance angle, but this is very much how romance scams can operate with the use of AI: generating fake videos of fake individuals, or pretending to be a celebrity and impersonating their voice, but getting them to say things they would never say, and targeting individuals to get them to send money, usually via cryptocurrency. There has been a huge increase in this, and a lot of celebrities are having their likenesses used via social media to target victims for financial fraud. I don’t actually have the video to play here; this is a screenshot. But if you see any of these videos, and to Jane’s point about how you identify this, they’re very realistic. It’s very difficult for people to tell that they might not be real, especially for victims who might be more vulnerable and not as savvy about this technology or these kinds of attacks.

These are some more advertisements from Telegram, but these relate more to the social engineering services on offer. Purple, on the right, you can see is offering call protection, but also generating ultra-realistic voices via AI, with different tones: male, female, neutral. These voices are used to spam people with calls to try to get them to hand over their money, and it’s being provided as a service so that others can use these different voices to scam unsuspecting individuals. When we think of phishing, we tend to think of emails or maybe SMS messages, but I think phone and video messages are going to become more and more of an issue with the advent of AI.

On the left-hand side, this is more about business email compromise, where they’re talking about all the different ways to make an email campaign successful, including AI-powered optimization. To go back to my earlier point, the criminals are using AI the same way we use it in everyday life. You could have an SEO marketing company saying the same thing to businesses that want to advertise their services. But from the threat actor side, with a different slant, they’re using AI and customizing email addresses so that they can spam people more successfully and conduct those financial crimes. It’s interesting how it’s being used in a similar way, but with a lot more malicious intent than the rest of us would have.

Moving on to sex-related crimes, I think this is a really important one, and one that people don’t always think of, or they sometimes think there isn’t a victim if it’s AI-generated, but that’s definitely not the case. The main areas where we’re seeing AI being used are child sexual abuse material (CSAM) and generating images relating to that, human trafficking, and sextortion and romance scams.

To highlight the AI generated child sexual abuse material, you know, Europol have made arrests quite recently related to this and put out information about it.

A lot of people are using AI to generate fairly realistic videos depicting CSAM. There are still victims in this, because the individuals watching this material may go on to target children in the real world, and also because these models need to be trained, and these images created, based on something. So there are still children being victimized by this kind of activity, and AI is making it more prevalent.

It’s something I think is really important that we’re able to stop, and it’s becoming more and more sophisticated. This quote from the IWF, the Internet Watch Foundation, is probably a little out of date now, but it says that the technology has progressed at such an accelerated rate that there are now very realistic videos depicting this abuse. And we are seeing very realistic videos and images like these being distributed across the dark web and other sources at this time. It’s definitely something that we need to stop.

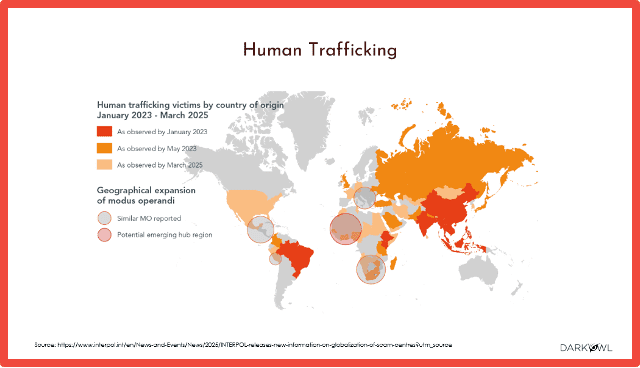

Human trafficking: I think people might not necessarily connect AI with human trafficking or see exactly how it works. This map, from Interpol, shows human trafficking victims across the world. It isn’t specific to AI, but I wanted to highlight how much of an issue human trafficking still is.

In terms of how we’re seeing AI used, it’s being used to generate fake job advertisements as part of the initial phase of human trafficking: enticing victims in with material that makes them think there’s a believable job or activity they want to be involved in, and suckering them into the whole industry. It’s also being used to blackmail victims of human trafficking by generating false sexually explicit images of them, and using those images to enforce the activity that’s going on.

And in the same vein, that brings us to sextortion. In a lot of cases, AI is being used to generate images of individuals and then extort money from them: basically creating nude or sexually explicit images with the victim’s face put on them. It isn’t them, it’s AI-generated, but the criminals threaten to share those images with their friends, family, and colleagues and claim they’re real. It’s really prevalent against young people through social media vectors, things like Snapchat and Instagram, where images are shared a lot, but it targets people of all ages, both females and males. It’s an awful practice, and there have been noted suicides of people targeted by these sextortion attacks. So again, it goes back to how people can identify that these images aren’t real. Victims feel the images look so real that, even though they know they’re fake because they never shared that material, they’re so worried that they pay these people. And unfortunately, there are fairly well-organized criminal groups doing this on a rotation basis: building up relationships with individuals, generating these images, and getting money from them. It’s becoming a really huge issue, as I said, particularly among the younger generation.

We’re also seeing AI being used by terrorist organizations and extremist groups. I would say it’s primarily being used for propaganda, but also for disinformation as part of those propaganda campaigns, and for putting a lot of that information out there. We’re also seeing them use it a lot for translation, so that they can reach individuals in multiple countries and bring them into their extremist beliefs, and for generating images, again with propaganda and disinformation in mind. As an example, this is taken from an ISIS chat group. You can see the ISIS flag blurred out in the background, but it’s an AI-generated image on an article about building bombs. So, as part of their propaganda and their education of individuals, they’re using AI to make this look more believable and draw individuals in. That’s one aspect we’ve seen.

This is another one that looks convincing if you don’t know what to look for: it’s Iranian terrorists claiming that they crashed a plane into Disneyland in Anaheim. You can see the Disney castle in the background and the crashed plane. I would argue the plane isn’t that realistic, because planes don’t tend to crash backwards. But it highlights that propaganda, and it’s also incentivizing people to go after these kinds of targets. They’re using AI to put ideas in people’s minds about ways in which they could go about conducting attacks, and that’s something we need to be very mindful of.

This is a video that was put out by Hamas. Again, this was not a real video, but it looked like a news conference of Hamas leadership talking about the Israeli army and claiming its soldiers wear diapers because they’re stationed for so long. That led to generated images of Israeli forces wearing diapers, which in some cases look quite authentic.

I think most people would see this as a joke, but obviously there can be more concerning ways in which people use these kinds of generated images. It even got to the point where a TikTok video went viral in which an Israeli commander appeared to talk about the nappies. So again, they were impersonating him and making him speak, as if it were really him, to try to back up the story that had been put out there. This was all put out by the Hamas terrorist group to undermine the Israeli military. So, it’s disinformation. I think most people would not believe this one, but they are putting out things that are much more believable, and it’s making it very difficult for people to understand what is real, especially in times of conflict.

And with that, I’m going to stop talking and hand it back to Jane.

Jane: Thanks, Erin. What you’ve demonstrated there in that kind of collection of examples is just the fact that, you know, AI, unfortunately, can increase the sophistication of a lot of bad actors really quickly. And so that can make our jobs, of course, really challenging.

So, we won’t necessarily do the poll now in the interests of time, but I’ll still talk through it because I think it’s interesting in the fact that, you know, when you reflect on these kinds of questions yourself, thinking about your own environment, whether, you know, your biggest challenges relate to some of the synthetic media that Erin sort of spoke about or perhaps it’s the scale of all of the things that you’re challenged with and in some cases even organizational readiness and maturity can pop up to being a big challenge for some practitioners and workplaces. But I think what is really interesting just to kind of emphasize your point there, Erin, is that this question really is one where the risks are kind of symmetrical in the sense that the same capability that helps us as practitioners, investigators, analysts, whatever in terms of automation and language generation, pattern recognition, it’s exactly what the threat actors are going to be using against us. And so, there’s an absolute need that we ensure that we have high levels of literacy when we’re kind of engaging in our work today. Because, AI itself, it’s not inherently malicious or benevolent, really. It’s what determines that is the outcome of its use and how well we govern it and verify and all of those kinds of things.

I think a lot of these developments are making things extremely difficult for practitioners, and we can see a world where we might simply not be able to verify whether something is true or not. That’s sort of the future we’re looking at, but at the moment we’re not quite there, and so there are certainly some techniques that we encourage you to consider. Let’s have a look at the next slide, Erin.

I think one of the key things, at least at OSINT Combine, when we’re talking about this challenge is that we really are talking about the analyst requiring stronger discernment. That acknowledges that AI gives threat actors velocity and capability that perhaps they didn’t have before. But analysts must also maintain their validation skills and be the purveyors of veracity as much as possible.

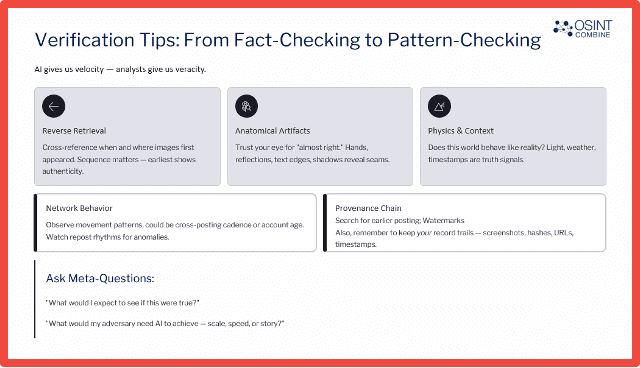

We think the most effective lens for looking at this is a multi-modality approach, if you like, that blends traditional verification and analytical tradecraft with AI-aware cues. We acknowledge that this can be a difficult task, of course, certainly in some of those disinformation examples you provided, Erin, where analysts are going to be required to perform validation and verification as well as potentially some really detailed content and metadata analysis. So, on top of your traditional analytical tradecraft around critical thinking and your analytical practices, you’re adding some quite technical skills when it comes to unpicking content and analyzing metadata. But we think it’s doable at this stage if you break it down. And so, we favor practical steps and guides for that process, such as inauthentic content analysis maps, which we’ve written blogs about that you can check out on our website. I’ve put some key examples there around anatomical artifacts and reverse retrieval and those kinds of things, which of course are always going to be helpful. Provenance chain is also super interesting for us when we’re considering how something has proliferated online, where it was created, and so forth.

But for me, I can’t get my head out of the space of the meta questions, and I think that’s largely to do with my traditional intelligence training. So, the questions I always come back to, in addition to some of these AI-aware cues, are things like, “What would I expect to see if this were true?” That has me going back to look at the context, which is still super important to us. And the other question I like to ask when I’m considering the adversary is, “Well, what would my adversary need AI to achieve here: would it be scale, speed or story?” That really speaks to intent, capability and, of course, motivation, which we always need to keep an eye on. Having AI help us out while also applying human validation and verification is, I think, a real emphasis that ensures the human remains in the loop. Really, we want our analysts to think critically, act ethically, and adapt intelligently alongside the machine they’re working with.

There are some resources available to download from the OSINT Combine website, and there are certainly more available. Let’s look at some key takeaways.

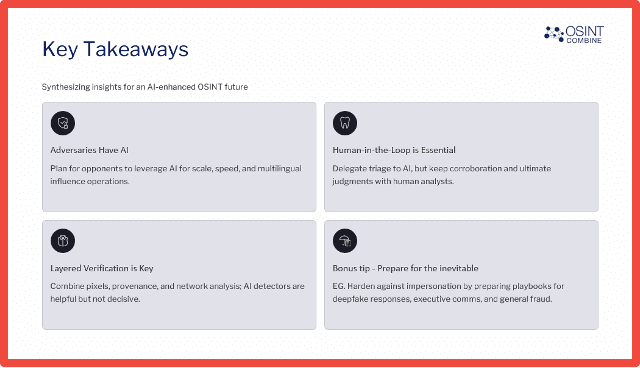

I think what we’ve been able to demonstrate today, through numerous examples across different crime types and actor groups, is that adversaries absolutely have access to AI and they’re not afraid to use it. And they’re certainly experimenting with it, just as we are at the moment too. Human in the loop remains essential; we’ve discussed that. And there’s an importance there for layered verification. So not just trusting one modality over another, but really thinking quite deeply about the different ways I can speak to reliability, relevance, credibility, and consistency when I’m looking to verify information. And as a bonus tip, keep in mind that some of these deepfakes, particularly the synthetic voice media that you identified, Erin, are becoming pretty sophisticated. So, there is an element here of preparing for the inevitable: hardening your organization against impersonation and preparing a playbook, if you like, for what happens if it occurs. I don’t think we can really avoid that.

I can see we’re at time. Kathy, I wonder if we pass to you. We’re more than happy to take questions offline and respond to people if there are any, but over to you for final words.

Kathy: Sure, we do have a couple of questions that have come in. If you two want to go ahead, we can address those two now, and if any others come in, we can address those offline later, if that would work.

Jane: Yes, I think that’s fine for us. I can see Erin nodding. So please, fire away. And of course, if people need to drop off, they can, and they’ll receive the recording.

Kathy: Sure. So, the first question is, how do you brief leadership when you suspect synthetic media but can’t prove it?

Jane: Yeah, we get asked that one quite a bit, Kathy, and Erin, you might have thoughts on this too. But I still go back to this idea that you need to explain confidence, not just certainty, to the leadership group. That means being really transparent about what you know, what you suspect, and what’s unverified, and being open to being contested on that too. You have to be professionally honest here. So, we want people to show their reasoning and how they came to a particular conclusion, which could mean identifying the anomalies, maybe network behavior, or something else that was flagged during the analysis. But I think it’s also really useful to say to leadership, hey, if this is genuine, then here’s the impact, because the impact is essentially what leaders need to know so that they can act accordingly. And vice versa: if it’s fabricated, here’s what we know the adversary is trying to achieve against us. Both of those things are really important, I think, for all leaders to know about.

Erin: Yeah, just to add to that, I agree with what you’re saying, Jane, and I think it comes down to transparency. Outside of AI, when we’re talking about intelligence and the things that we find, just because something is low confidence, or we haven’t been able to verify it with a lot of other sources, doesn’t mean it shouldn’t be shared and made part of the intelligence package. So, I think it’s just making sure that we’re using those traditional ways of doing assessment and not doing anything different just because it’s AI.

Kathy: Great, thank you both. And kind of piggybacking on that a little bit. What’s your protocol for documenting AI’s role in your findings?

Jane: Yeah, I think it’s really important, Erin, and you were just touching on it then, weren’t you? Just because we have AI in the mix now doesn’t mean we’re going to throw the baby out with the bathwater when it comes to analytical and assessment tradecraft. All of that still applies, but we need to be professionally honest and transparent about when and how AI is being used throughout the process. In the US, there’s some strong guidance on this point for the US intelligence community, but OSINT Combine has produced a best practice guide for citing AI that’s intended for anyone. So, you don’t have to be in the intelligence community; you could be in the private sector. Really, it’s about accountability through transparency. You want to be transparent about how AI was used as part of your assessment, what tasks it supported, where the output was validated, and where the human analyst made the final judgement. Typically, I now see something like a short provenance note or disclaimer in the methods section of analytical reporting; that’s not uncommon. But we really need to be transparent, to your point earlier, Erin.

Kathy: Great. Thank you. That is all the questions that have come in to us right now, but we do have up on the screen contact information for both Jane and Erin, if anybody has further questions, or they’d like to reach out to us.

And I’d like to thank Jane and Erin for an insightful discussion today. As a reminder to all of the attendees, we will be following up via email with a link to the recording and other resources. And we thank you all for joining us for this webinar and we hope to see you all again at another webinar in the future. Thank you.

Jane: Thank you.